News

- (2020.09.12) We have released the code and the test data.
- (2020.07.03) The paper has been accepted to ECCV 2020; we will release the remaining data and code soon.
- (2020.04.10) We have released the Train and Val sets of the Trans10K dataset.


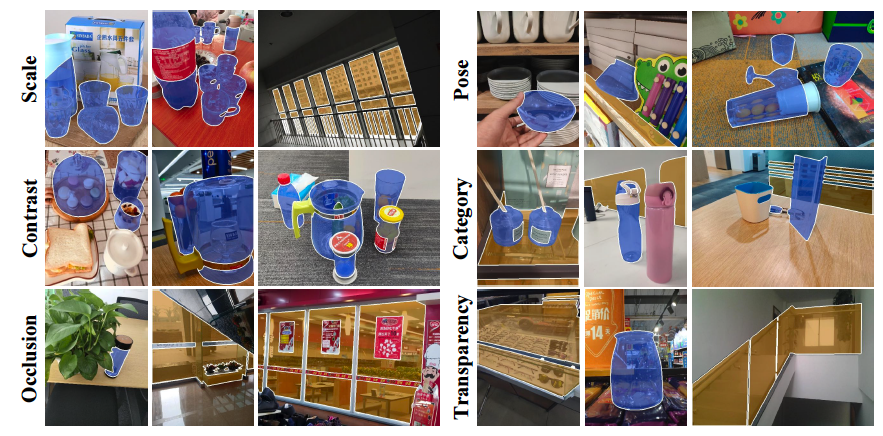

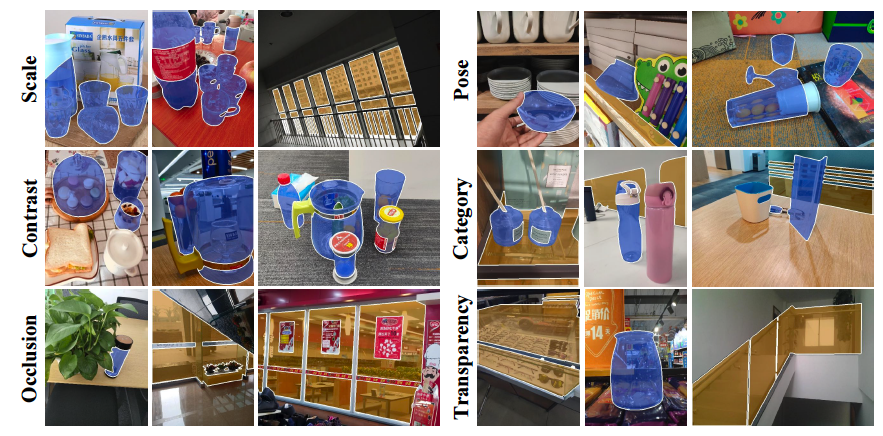

Transparent objects such as windows and bottles made of glass are common in the real world. Segmenting transparent objects is challenging because their appearance is largely inherited from the image background, which makes them look similar to their surroundings. Besides the technical difficulty of this task, only a few previous datasets were specifically designed and collected to explore it, and most of them have major drawbacks: they either have a limited sample size, such as merely a thousand images without manual annotations, or they generate all images with computer graphics methods (i.e., the images are not real). To address this problem, this work proposes a large-scale dataset for transparent object segmentation, named Trans10K, consisting of 10,428 images of real scenarios with careful manual annotations, which is 10 times larger than existing datasets. The transparent objects in Trans10K are extremely challenging due to their high diversity in scale, viewpoint, and occlusion, as shown in Fig. 1. To evaluate the effectiveness of Trans10K, we propose a novel boundary-aware segmentation method, termed TransLab, which exploits the boundary as a clue to improve the segmentation of transparent objects. Extensive experiments and ablation studies demonstrate the effectiveness of Trans10K and validate the practicality of learning object boundaries in TransLab. For example, TransLab significantly outperforms 20 recent deep-learning-based object segmentation methods, showing that this task is largely unsolved. We believe that both Trans10K and TransLab make important contributions to academia and industry, facilitating future research and applications.


Segmenting Transparent Objects in the Wild

Enze Xie, Wenjia Wang, Wenhai Wang, Mingyu Ding, Chunhua Shen, Ping Luo

Tech Report

BibTeX

title={Segmenting Transparent Objects in the Wild},
author={Xie, Enze and Wang, Wenjia and Wang, Wenhai and Ding, Mingyu and Shen, Chunhua and Luo, Ping},
journal={arXiv preprint arXiv:2003.13948},
year={2020}
}

Acknowledgements